The Invisible Editors of the Internet

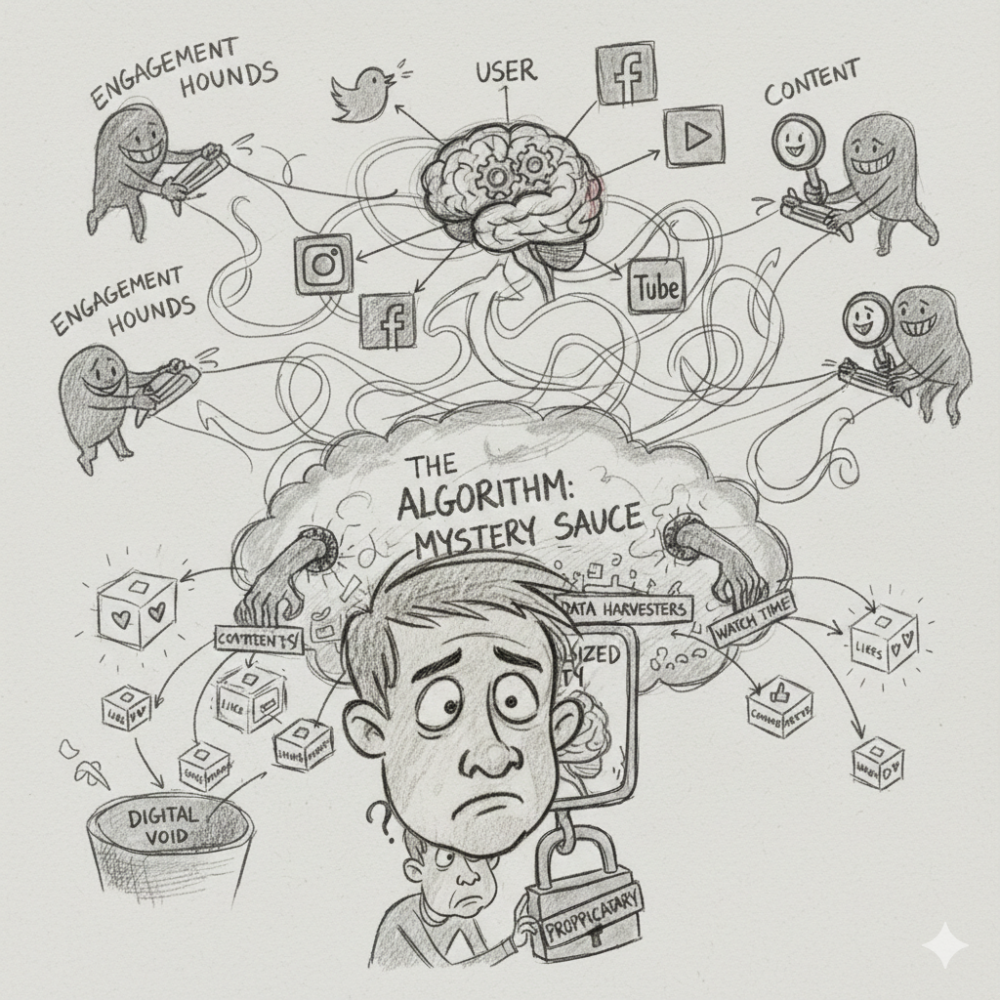

Every swipe, tap, pause, and scroll is quietly adjudicated by systems most people never see. Social media algorithms function as invisible editors, deciding what deserves attention and what fades into obscurity. They do not sleep. They do not editorialize in the human sense. Yet they shape public discourse with a precision and scale no newsroom could ever match.

What appears spontaneous is anything but. Behind each feed lies an intricate lattice of probabilistic judgments, continuously recalibrated in real time.

Why Algorithms Became the Most Powerful Media Gatekeepers

Traditional media once relied on editors, producers, and institutional values to curate information. Social platforms replaced those gatekeepers with mathematical models optimized for scale. Algorithms became dominant not because they were more ethical or accurate, but because they were faster, cheaper, and far easier to adapt.

In an attention economy, whoever controls distribution controls influence. Algorithms inherited that role by default.

What People Think Algorithms Do vs. What They Actually Do

The popular belief is that algorithms simply show users what they like. The reality is more complicated. These systems are not designed to please; they are designed to predict.

They forecast which piece of content is most likely to hold attention for the longest possible time, given a specific user, at a specific moment, in a specific context.
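A minimal sketch of that forecasting step, assuming a toy model in which the predicted hold time is a post's baseline multiplied by the user's topic affinity and a time-of-day factor. The names `Candidate`, `predict_hold_seconds`, and `late_night_boost` are hypothetical illustrations; real ranking systems are proprietary and far more elaborate.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    post_id: str
    topic: str
    base_hold_seconds: float  # hypothetical average hold time across all users


def predict_hold_seconds(candidate, topic_affinity, hour, late_night_boost=1.2):
    """Toy forecast: scale the baseline by user affinity and time of day."""
    # Unknown topics get a neutral affinity of 0.5 (an assumption of this sketch).
    affinity = topic_affinity.get(candidate.topic, 0.5)
    # Assume attention lingers slightly longer late at night.
    context = late_night_boost if hour >= 22 or hour < 5 else 1.0
    return candidate.base_hold_seconds * affinity * context


def rank_feed(candidates, topic_affinity, hour):
    """Order candidates by predicted hold time, longest first."""
    return sorted(
        candidates,
        key=lambda c: predict_hold_seconds(c, topic_affinity, hour),
        reverse=True,
    )
```

In this sketch, a cooking post with a modest baseline can outrank a sports post with a higher one, simply because this particular user's affinity for cooking is stronger. The ranking reflects a forecast about one person, not a verdict on the content.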

The Core Goal No One Talks About: Predicting Human Attention

At their core, algorithms are anticipatory machines. They attempt to model human cognition, emotion, and impulse through data proxies. The question is never “Is this content good?”

The real question is: “Will this person stop scrolling for this?”

From Chronological Feeds to Curated Reality

Chronological feeds favored recency. Algorithmic feeds favor relevance as defined by behavior. This shift quietly transformed social media from a stream of updates into a personalized reality tunnel.

Time stopped being the organizing principle. Probability took over.

The Data You Know Is Tracked — and the Data You Don’t

Likes, shares, and follows are the obvious signals. Less obvious are hover duration, replays, rewinds, muted views, screenshot behavior, and abandonment timing.

Every micro-interaction becomes a data point. Silence itself is information.

Engagement Is Not Just Likes, It’s Behavior Patterns

A like is a blunt instrument. Behavioral patterns are precision tools. Algorithms care more about how someone behaves across hundreds of sessions than how they react to a single post.

Consistency, deviation, and escalation matter more than enthusiasm.

Dwell Time: The Metric That Quietly Outranks Everything Else

Dwell time measures how long attention lingers. It is one of the most powerful yet least discussed metrics. Content that holds users without prompting overt interaction often outperforms content that provokes quick reactions.

Stillness can be louder than applause.
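As a rough illustration of how dwell time could be derived, the sketch below sums the time each post spends on screen. The event format, tuples of `(post_id, entered_at, exited_at)` in seconds, is an assumption made for this example and not any platform's actual logging schema.

```python
from collections import defaultdict


def dwell_seconds(view_events):
    """Total on-screen time per post from (post_id, entered_at, exited_at) tuples."""
    totals = defaultdict(float)
    for post_id, entered_at, exited_at in view_events:
        # Ignore malformed events where the exit precedes the entry.
        if exited_at > entered_at:
            totals[post_id] += exited_at - entered_at
    return dict(totals)
```

Note that a post can accumulate dwell time without a single like or comment. A user who returns to the same post twice contributes both visits to its total.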

Why Pauses, Hesitations, and Scroll Speed Matter

Scrolling speed reveals interest gradients. A slowdown signals cognitive engagement. A pause suggests contemplation. Algorithms interpret these micro-moments as indicators of value, even when no button is pressed.

Attention leaves fingerprints.
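One way to picture this: sample the scroll position over time, compute the speed between samples, and count the intervals where the feed nearly stops. The sample format `(timestamp_seconds, scroll_offset_pixels)` and the `pause_threshold` value are hypothetical choices for this sketch.

```python
def scroll_speeds(samples):
    """Pixels per second between consecutive (timestamp, offset) samples."""
    speeds = []
    for (t0, y0), (t1, y1) in zip(samples, samples[1:]):
        elapsed = t1 - t0
        if elapsed > 0:
            speeds.append(abs(y1 - y0) / elapsed)
    return speeds


def count_pauses(samples, pause_threshold=50.0):
    """Count intervals where scrolling dropped below the threshold speed."""
    return sum(1 for speed in scroll_speeds(samples) if speed < pause_threshold)
```

A session that races past most posts but slows to a crawl over one of them produces a visible dip in this speed series, even though the user never tapped anything.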

The Role of Negative Emotions in Algorithmic Amplification

Anger, outrage, and anxiety tend to generate sustained attention. Algorithms do not understand morality, but they understand retention. Content that elicits strong negative emotions often keeps users engaged longer, even if the experience is unpleasant.

The system optimizes for duration, not well-being.

Why Controversy Often Outperforms Quality

Quality is subjective. Controversy is measurable. Disagreement produces comments, replies, revisits, and shares. These secondary behaviors amplify reach, regardless of accuracy or nuance.

Polarization becomes a performance metric.

Algorithmic Memory: How Past Behavior Haunts Future Feeds

Algorithms remember. Past interests weigh heavily in future recommendations, creating inertia that is difficult to reverse.

What once intrigued can quietly become a cage.
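That inertia can be sketched with an exponentially decayed interest score, a common way to blend old and new signals. This is an assumed model for illustration, not a documented platform mechanism, and the `decay` value of 0.9 is arbitrary.

```python
def update_interest(previous, signal, decay=0.9):
    """Blend the stored interest with the latest session's signal (0.0 to 1.0)."""
    return decay * previous + (1.0 - decay) * signal


def replay(initial, signals, decay=0.9):
    """Apply a sequence of session signals to an initial interest score."""
    score = initial
    for signal in signals:
        score = update_interest(score, signal, decay)
    return score
```

Under these assumptions, a topic with a full interest score of 1.0 still retains roughly 0.59 after five consecutive sessions of zero engagement. The past fades, but slowly.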

Feedback Loops and the Illusion of Choice

Users shape the algorithm, and the algorithm shapes users. This recursive loop creates the illusion of autonomous choice while steadily narrowing exposure.

Freedom exists, but it is statistically guided.
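The narrowing effect can be shown with a small deterministic simulation. It assumes exposure is proportional to a topic's current weight and that engagement feeds back into that weight. The function names and the update rule are hypothetical, chosen only to make the loop visible.

```python
def run_feedback_loop(weights, preference, rounds):
    """Each round, exposure follows weight, and engagement raises weight."""
    weights = dict(weights)  # copy so the caller's dict is untouched
    for _ in range(rounds):
        total = sum(weights.values())
        for topic in weights:
            exposure = weights[topic] / total
            # Engagement grows with both exposure and the user's preference.
            weights[topic] += exposure * preference[topic]
    return weights


def share(weights, topic):
    """Fraction of total weight held by one topic."""
    return weights[topic] / sum(weights.values())
```

Starting from equal weights, a mild preference (0.6 versus 0.4) tilts the feed a little further toward the favored topic every round. No single step looks like a decision, yet the mix keeps drifting.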

Shadow Signals Platforms Rarely Publicize

Not all signals are disclosed. Platform-specific heuristics, experimental weights, and undisclosed penalties influence distribution. Some signals are proprietary by necessity. Others remain opaque by design.

Opacity preserves competitive advantage.

The Difference Between Personalization and Manipulation

Personalization tailors content to preferences. Manipulation nudges behavior toward outcomes beneficial to the platform. The line between the two is thin, fluid, and often invisible.

Intent matters, but impact matters more.

Why Two People Never See the Same Platform

Feeds are not shared experiences. They are individualized projections shaped by behavior, location, device, time of day, and social graph.

The platform is singular. The experience is not.

Content Freshness vs. Content Relevance

Fresh content introduces novelty. Relevant content sustains engagement. Algorithms constantly negotiate between the two, testing whether recency can outperform familiarity.

Sometimes old ideas win simply because they feel safe.
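A simple way to picture that negotiation is a blended score in which freshness decays with a half-life and is mixed with a relevance estimate. The half-life, the `freshness_weight`, and the linear blend are all assumptions of this sketch.

```python
def freshness(age_hours, half_life_hours=6.0):
    """Freshness halves every `half_life_hours`; brand-new content scores 1.0."""
    return 0.5 ** (age_hours / half_life_hours)


def blended_score(relevance, age_hours, freshness_weight=0.3, half_life_hours=6.0):
    """Linear mix of freshness and a relevance estimate in the range 0.0 to 1.0."""
    fresh = freshness(age_hours, half_life_hours)
    return freshness_weight * fresh + (1.0 - freshness_weight) * relevance
```

With the default weight of 0.3, a two-day-old post with relevance 0.9 outscores a brand-new post with relevance 0.4. Raise the weight to 0.6 and the new post wins instead. The trade-off lives in a single tunable number.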

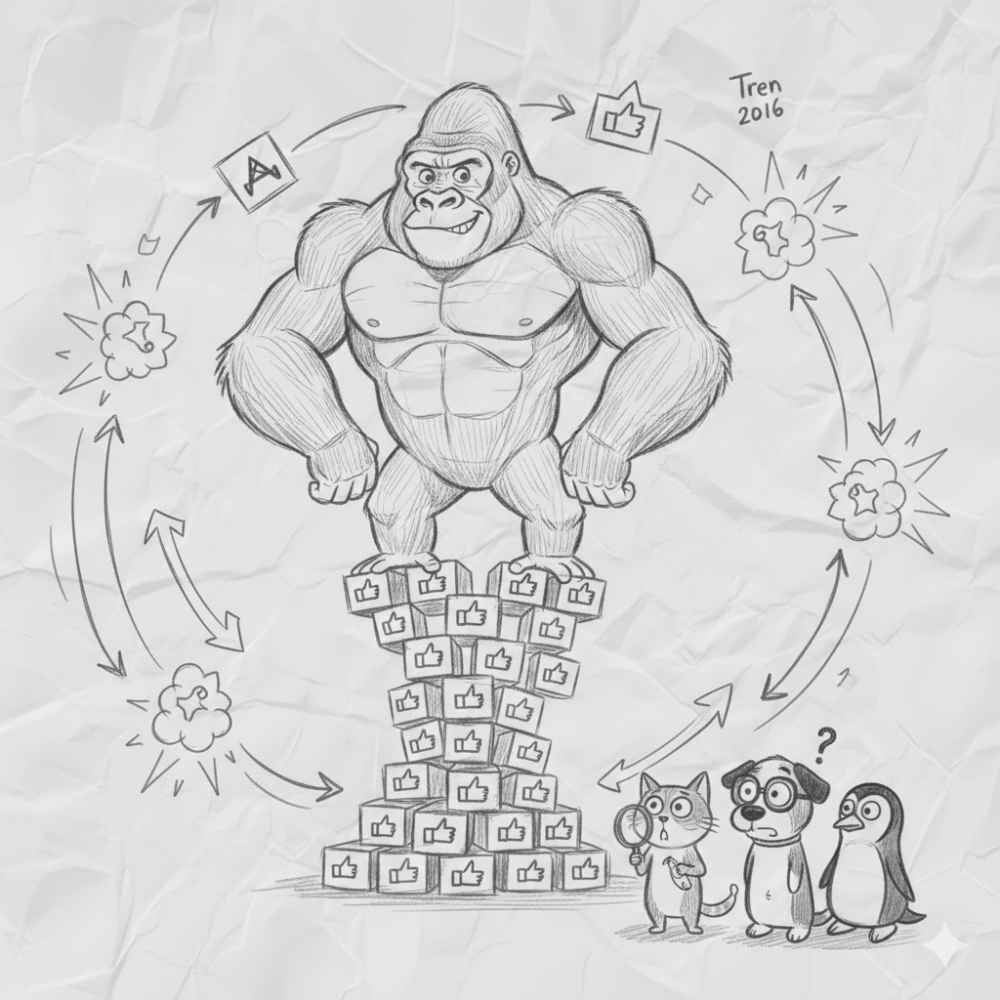

How Algorithms Test Content Before Fully Releasing It

Before wide distribution, content is often shown to a small, representative audience. Performance during this trial phase determines whether reach expands or contracts.

This is not censorship. It is probabilistic triage.

The “Small Batch” Distribution Phase Explained

Small batch testing allows algorithms to measure early signals without risking feed degradation. Weak performance leads to quiet disappearance. Strong performance triggers exponential exposure.

Virality is rarely instant. It is approved.
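The trial phase described above can be sketched as a staged rollout rule: measure the engagement rate in the current batch, then either expand the audience or stop. The threshold, growth factor, and cap below are hypothetical values, not figures published by any platform.

```python
def next_audience_size(
    current_size,
    engaged,
    expand_threshold=0.10,
    growth_factor=10,
    max_size=1_000_000,
):
    """Return the next batch size, or 0 if the post fails the trial."""
    if current_size <= 0:
        return 0
    engagement_rate = engaged / current_size
    if engagement_rate >= expand_threshold:
        # Strong early signal: widen the audience, up to the cap.
        return min(current_size * growth_factor, max_size)
    # Weak signal: stop distributing.
    return 0
```

In this sketch, 15 engaged viewers out of 100 would push the post to 1,000 people, while 5 out of 100 would end its run quietly.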

Why Some Posts Die Instantly While Others Explode Later

Timing, audience state, competing content, and signal noise all influence early performance. Some posts fail the initial test but resurface later when contextual conditions change.

Dormancy is not always death.

Algorithmic Bias Is Often Accidental, Not Intentional

Bias frequently emerges from data imbalance rather than deliberate design. When certain behaviors dominate datasets, algorithms reinforce them unintentionally.

Neutral math can still produce skewed outcomes.
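A toy example of how imbalance alone can skew results: a ranker that promotes whatever topic has the most logged interactions. The group labels and the log format, a list of `(group, topic)` pairs, are invented for illustration.

```python
from collections import Counter


def top_topic(interactions):
    """Most frequent topic across all logged (group, topic) interactions."""
    counts = Counter(topic for _group, topic in interactions)
    return counts.most_common(1)[0][0]


def top_topic_by_group(interactions):
    """Most frequent topic within each group."""
    per_group = {}
    for group, topic in interactions:
        per_group.setdefault(group, Counter())[topic] += 1
    return {group: counts.most_common(1)[0][0] for group, counts in per_group.items()}
```

If 90 of 100 logged interactions come from one group, the global winner is that group's favorite, even when the smaller group clearly prefers something else. Nothing in the code singles anyone out. The skew comes entirely from who generated the data.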

Why Algorithms Struggle With Context and Nuance

Algorithms excel at pattern recognition, not comprehension. Sarcasm, irony, and cultural subtext often escape accurate classification.

Meaning is human. Patterns are not.
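To see why pattern matching stumbles on sarcasm, consider a deliberately naive keyword classifier. The word lists are hypothetical and tiny. Production systems use far richer models, though the same kind of failure can still occur.

```python
import re

POSITIVE_WORDS = {"great", "love", "amazing"}
NEGATIVE_WORDS = {"terrible", "hate", "awful"}


def naive_sentiment(text):
    """Label text by counting positive and negative keywords."""
    words = re.findall(r"[a-z']+", text.lower())
    positives = sum(1 for word in words if word in POSITIVE_WORDS)
    negatives = sum(1 for word in words if word in NEGATIVE_WORDS)
    if positives > negatives:
        return "positive"
    if negatives > positives:
        return "negative"
    return "neutral"
```

Given the sentence "Oh great, another outage. I love waiting.", this classifier returns "positive". Every keyword matches, and the meaning is missed entirely.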

The Quiet Power of Comment Sections

Comments extend lifespan. They signal relevance and provoke return visits. A post with an active comment section often outperforms one with passive approval.

Conversation fuels circulation.

Why Replies Often Matter More Than Original Posts

Replies create dialogue chains. They multiply interaction points and keep users anchored within a single content node.

The aftershock can be stronger than the initial impact.

The Hidden Penalty of Posting Too Often

Excessive posting can dilute engagement signals. When audiences stop responding, algorithms infer declining relevance.

Silence between posts can sometimes increase the weight each one carries.

Consistency Myths Platforms Rarely Correct

Consistency matters, but not mechanically. Posting frequency without audience resonance leads to diminishing returns.

Predictability without value becomes noise.

Why Platform Updates Feel Random but Aren’t

Algorithm updates often reflect shifts in business priorities: monetization strategies, regulatory pressure, or competitive positioning.

The change feels technical. The reason rarely is.

Algorithm Changes as Business Decisions, Not Technical Ones

Behind every update lies an economic calculus. Ad inventory, user retention, and growth metrics dictate design choices more than engineering curiosity.

Code follows commerce.

The Economic Incentives Behind Feed Design

Feeds are optimized for revenue stability. Attention translates into advertising value. Longer sessions mean more opportunities to monetize presence.

Engagement is not the product. Attention is.

Why “Beating the Algorithm” Is the Wrong Goal

Algorithms are not opponents. They are mirrors reflecting user behavior at scale. Attempts to exploit loopholes rarely endure.

Adaptation outlasts optimization tricks.

Working With Algorithms Instead of Against Them

Content that respects audience intent, delivers clarity, and sustains attention aligns naturally with algorithmic priorities.

Authenticity scales better than manipulation.

What Transparency Would Actually Look Like

True transparency would involve clear explanations of ranking priorities, data usage boundaries, and systemic trade-offs. It would also require acknowledging limitations.

Opacity is easier. Clarity is riskier.

The Future of Algorithms and Human Attention

As models grow more sophisticated, prediction will become more granular. The challenge will not be accuracy, but restraint.

The question is no longer whether attention can be modeled. It is whether it should be endlessly optimized.

Conclusion: Understanding the System That Shapes What We See

Social media algorithms are neither villains nor saviors. They are instruments, shaped by incentives, data, and design choices. Understanding how they work does not eliminate their influence, but it restores a measure of agency.

In a world mediated by invisible editors, awareness becomes the most valuable form of literacy.